Behold, alpacas: CrowdSec 1.2 is out, and it comes packed with brand-new features!

For this version, we put the spotlight on notification plugins, new bouncers and a completely reworked consensus engine.

Notification plugins

CrowdSec is now able to notify third-party services when an alert occurs or a decision is taken (configured at the profile level).

Version 1.2 ships with native support for Splunk, Elasticsearch and Slack, while the generic HTTP push module lets it interface with almost any other service that exposes an HTTP endpoint.
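To make the generic HTTP push idea concrete, here is a minimal sketch of what pushing an alert to an HTTP endpoint can look like. It is not CrowdSec's plugin code or configuration: the function name `build_push_request`, the endpoint URL and every field in the example alert are assumptions made up for illustration.

```python
import json
import urllib.request


def build_push_request(endpoint_url, alert):
    """Build (but do not send) a JSON POST request carrying one alert."""
    body = json.dumps(alert, sort_keys=True).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical alert: these field names are illustrative, not CrowdSec's schema.
example_alert = {
    "scenario": "example/ssh-bruteforce",
    "source_ip": "192.0.2.10",
    "decision": "ban",
    "duration": "4h",
}

# Hypothetical endpoint: any service exposing an HTTP endpoint could sit here.
request = build_push_request("https://example.com/hooks/alerts", example_alert)

# Sending is left out on purpose; urllib.request.urlopen(request) would do it.
```

Any receiving service only needs to accept a POST with a JSON body, which is why a single generic module can cover so many integrations.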

This supercharges the existing application ecosystem with CrowdSec’s detection features. For example, alerts and decisions can now be pushed directly into Slack channels, while the Splunk and Elasticsearch integrations help SecOps teams quickly detect and react to attacks.

Our main driver was to ensure the community can easily create notification plugins without imposing languages or communication protocols.

So we went with Go plugins and gRPC, an open source, high-performance request-response protocol. This means that custom notification plugins can now be built outside of the CrowdSec ecosystem and do not necessarily have to be written in Go. Additionally, notification plugins use Go templates in combination with Sprig to allow for powerful transformation and formatting of events and alerts.

Stay tuned for new iterations, and do not hesitate to share your feedback or drop us a line about your needs.

New bouncers

CrowdSec 1.2 also adds new bouncers: Cloudflare and Nginx bouncers are now available as packages in our repository.

Consensus V2

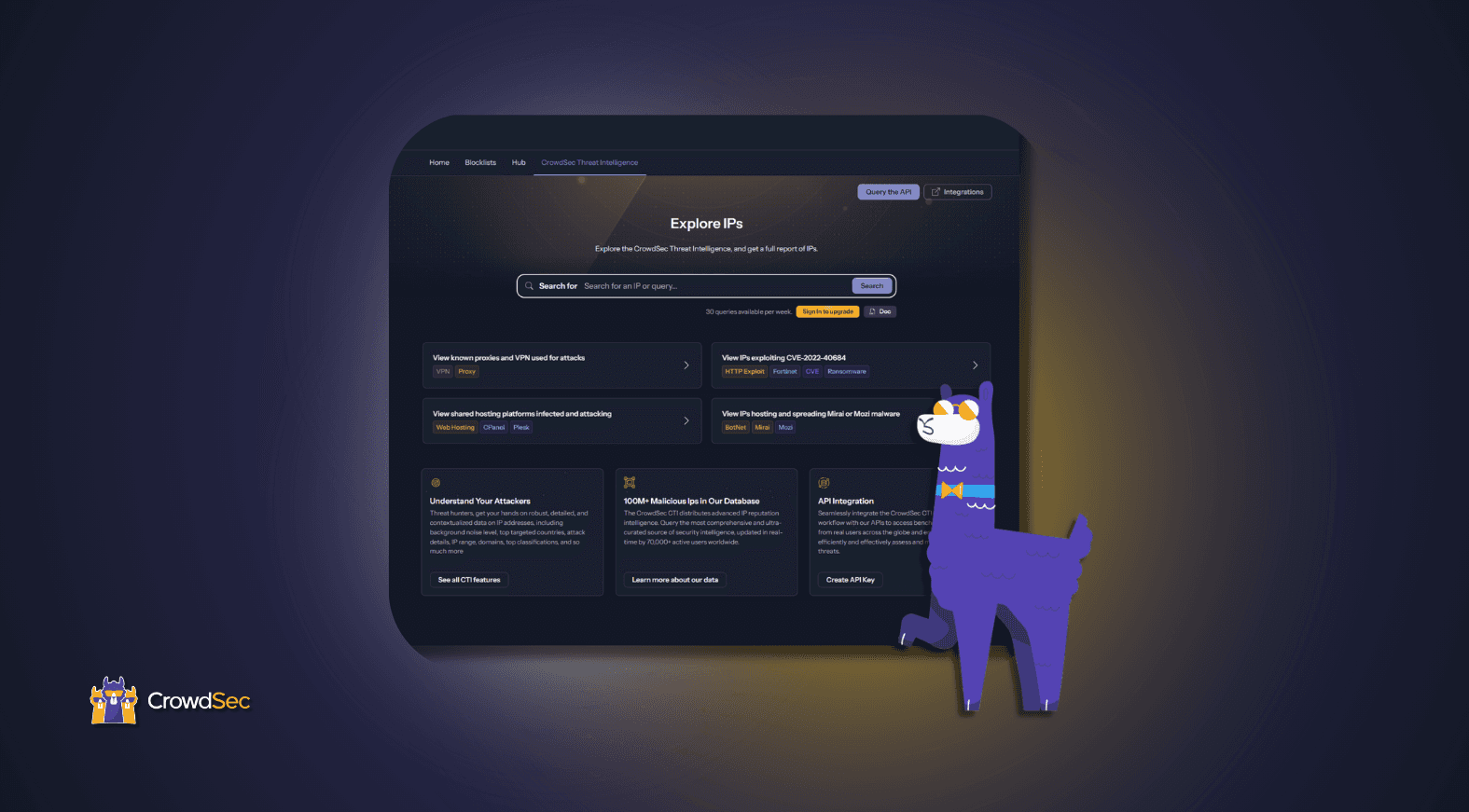

It is no secret that most IP reputation databases suffer from false positives and poisoning, making them less reliable, if not utterly useless.

At CrowdSec, we made it our mission to build a solution that is fully reliable and free of false positives and poisoning. Until now, we chose to distribute only IPs coming from our honeypots to ensure the confidence and reliability of our information.

Entering the new consensus

As of now, thousands of malicious IPs shared by the community are redistributed to prevent attacks. How do we make sure signals from the community are reliable? Our secret is reputation.

Each user (or “watcher” as we call them) gets a “trust rank” or reputation, based on various criteria such as:

- Regularity of the watcher in sharing signals or IPs with CrowdSec.

- Consistency of the shared information (when compared to other users' data and CrowdSec consolidated statistics).

- Correlation of shared data with CrowdSec honeypots and high reputation watchers.

- Cross-check with third-party services, as it is good practice to enrich the consensus process with information from other sources, if available.

Finally, we also take into consideration the AS (Autonomous System) numbers linked to the IP. Some ranges are well known to be the source of massive and persistent aggressive behavior.

CrowdSec relies exclusively on signals from high-reputation users to make sure the IP base contains no false positives or poisoned IPs. The goal is to drastically increase the cost and difficulty for a malevolent user to participate in the consensus, and to keep our database reliable.
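The reputation idea described above can be illustrated with a toy sketch. The real consensus algorithm is not public, so everything here is an assumption: the weights, the threshold, the `Watcher` fields and the rule "keep an IP if at least one high-trust watcher reported it" are all invented for illustration, and the AS number check is left out.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold, invented for illustration only.
WEIGHTS = {
    "regularity": 0.25,
    "consistency": 0.25,
    "honeypot_correlation": 0.30,
    "third_party_agreement": 0.20,
}
TRUST_THRESHOLD = 0.8


@dataclass
class Watcher:
    """One watcher, with each criterion scored between 0.0 and 1.0."""
    name: str
    regularity: float
    consistency: float
    honeypot_correlation: float
    third_party_agreement: float


def trust_rank(watcher):
    """Weighted sum of the criteria (an assumed formula, not the real one)."""
    return sum(w * getattr(watcher, criterion) for criterion, w in WEIGHTS.items())


def trusted_ips(reports):
    """Keep IPs reported by at least one watcher at or above the threshold.

    `reports` is a list of (Watcher, ip) pairs.
    """
    return sorted({ip for watcher, ip in reports if trust_rank(watcher) >= TRUST_THRESHOLD})


veteran = Watcher("veteran", 0.9, 0.9, 0.9, 0.9)
newcomer = Watcher("newcomer", 0.2, 0.2, 0.2, 0.2)

reports = [
    (veteran, "192.0.2.10"),
    (newcomer, "198.51.100.7"),  # possibly a poisoning attempt
    (newcomer, "192.0.2.10"),
]
shared = trusted_ips(reports)
```

In this toy model, the newcomer's report of `198.51.100.7` is ignored until the newcomer earns a higher rank, which is the kind of cost a poisoning attempt has to pay.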

We are very selective and strict about which IPs we distribute to the community. For example, amongst the 700,000+ IPs in our database, only 2% (roughly 14,000 addresses) will make it to the community blacklist. That is how serious we are about data quality!

At this point, I would like to share two thoughts with you.

1. Consensus is a key to our common success.

That is why this is only the second iteration, with more to come. Our ultimate goal is to share most of the IPs that are reported to us, not just a fraction.

2. Open sourcing consensus algorithms.

We discussed it a lot internally, but long story short, we will very likely do it. However, our goal is to move very fast on this development and be extremely agile, which is not always aligned with the “open source code quality chart”. So bear with us for now.

We build, rinse, repeat, enhance and look for the right balance. Once this work has stood the test of time, we will open source it so that you can comment, adapt, push MRs, PRs, etc. If you have expertise in this precise field and want to be involved before we open source it, feel free to give us a shout: we will be delighted to learn from your experience and share the inner workings of the current consensus.